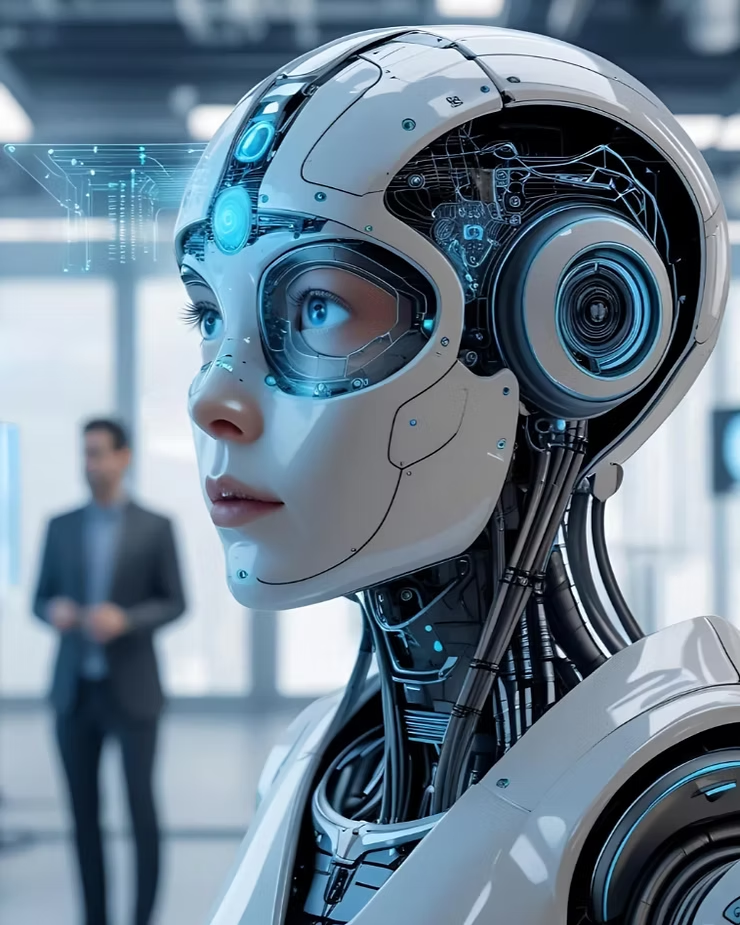

Artificial intelligence (AI) is rapidly becoming integral to modern society. It offers enormous potential benefits but also raises profound ethical and safety concerns. As AI systems grow more capable, addressing these concerns is vital to ensure that they act responsibly and safely, serving humanity rather than harming it.

The Intersection of AI Ethics and Safety

AI ethics is concerned with ensuring that intelligent systems align with human values, rights, and fairness, whereas AI safety focuses on preventing harm from malfunctioning or misused AI. The two are interconnected: ethical misalignments often lead directly to safety risks, so they must be considered together. For example, a model that makes biased decisions is both an ethical failure and a source of real harm to the people it affects.

Core Ethical Challenges in AI

Several critical ethical challenges dominate discussions around AI development:

- Bias and Fairness: AI systems trained on biased data can produce discriminatory outcomes, perpetuating existing social inequalities.

- Transparency and Explainability: Many AI systems reach decisions through opaque processes, which makes those decisions difficult to understand or challenge, especially in sensitive areas such as employment or healthcare.

- Privacy: AI systems that collect and analyze personal data at scale pose substantial privacy risks.

- Autonomy and Control: Ensuring that humans retain meaningful control over powerful autonomous systems, such as self-driving vehicles or military drones, is an ethical imperative.

Safety Risks Associated with AI

The powerful capabilities of modern AI also introduce significant safety concerns:

- Unintended Consequences: AI systems may behave unpredictably or produce unexpected outcomes due to incomplete training data or unforeseen real-world conditions.

- Malicious Uses: AI can be deliberately misused for purposes such as disinformation, surveillance, or automated cyberattacks.

- Loss of Human Oversight: Over-reliance on automation can erode human oversight, allowing critical errors to go unnoticed.

Addressing Ethical and Safety Concerns

Key approaches to addressing these ethical and safety concerns include:

- Developing Ethical Frameworks: Clear guidelines, such as those proposed by AI ethics committees and by organizations like the IEEE and OpenAI, help guide responsible AI development.

- Robust Regulation: Laws and regulations, such as the EU’s AI Act, establish enforceable standards and penalties for unethical or unsafe AI deployment.

- Transparency Initiatives: Explainable AI (XAI) techniques make AI decisions easier for stakeholders to understand and, where necessary, to challenge.

Real-world Examples and Industry Leadership

Companies and organizations are proactively addressing AI ethics and safety:

- Healthcare: AI models used in clinical settings undergo ethical review and safety validation to protect patients and their privacy.

- Technology Firms: Industry leaders such as Google, Microsoft, and IBM have created ethics boards and published principles outlining their commitment to responsible AI.

- Autonomous Vehicles: Automotive companies test autonomous driving systems extensively and define protocols for how those systems should make decisions in scenarios such as unavoidable accidents.

Future Directions in Ethical AI and Safety

Promising directions for future work include:

- AI Alignment Research: Developing methods that reliably align the goals and behavior of AI systems with human values.

- International Collaboration: Establishing global ethical standards and cooperative regulatory frameworks so that AI is governed consistently and effectively across borders.

- Public Engagement: Actively involving citizens and stakeholders in shaping AI policies and ethical standards, so that societal values and concerns are fully represented.

Conclusion

Ethical considerations and safety measures are indispensable components of AI development. By addressing these issues proactively, we can help ensure that AI technology benefits humanity safely, responsibly, and sustainably. A comprehensive, unified approach to ethics and safety is essential to build public trust, prevent harm, and fully realize AI’s transformative potential.